Unleashing the Power of AI and HPC

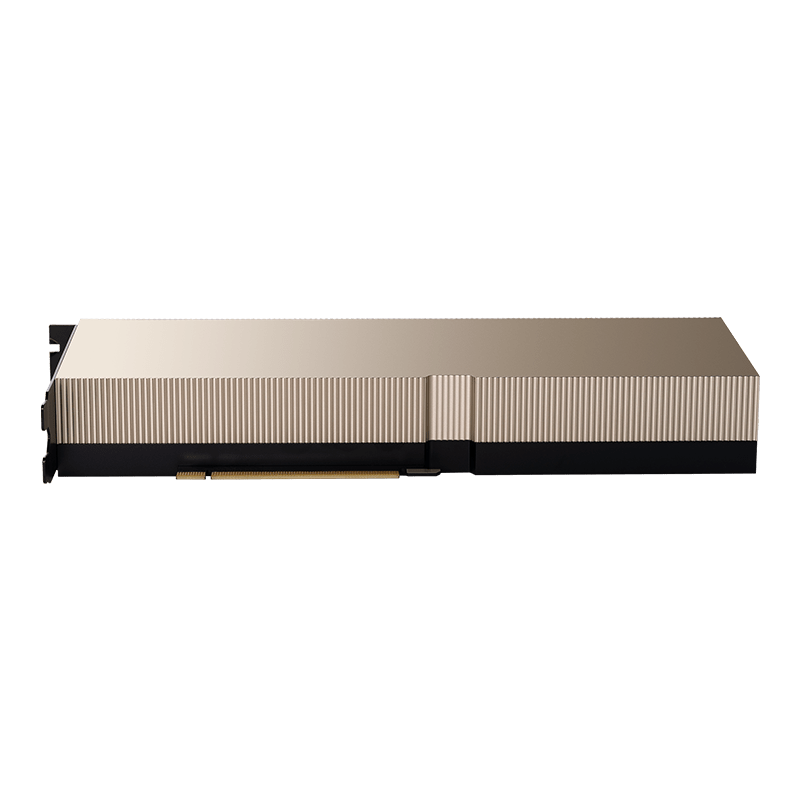

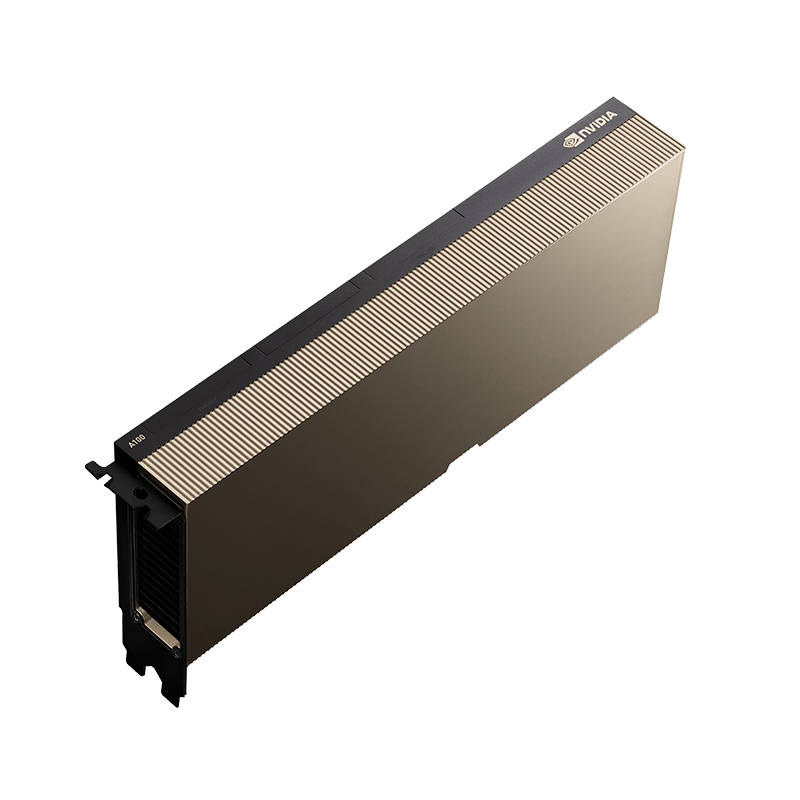

NVIDIA Tesla A100 PCIe Tensor Core GPU

40/80GB

NVIDIA has long been a frontrunner in high-performance computing and AI with its GPU solutions. The NVIDIA Tesla A100 PCIe continues that line, targeting data centers and AI-driven workloads. This article explores the A100 PCIe in depth, covering its features, uses, advantages, and considerations. Powered by the NVIDIA Ampere architecture, the A100 Tensor Core GPU delivers up to 20 times the performance of the previous generation. It can be partitioned into as many as seven GPU instances, so capacity can be adjusted dynamically as computational needs change. The A100 80GB version also offers memory bandwidth approaching 2 terabytes per second (1,935 GB/s on the PCIe card, per the specifications below), making it well suited to large-scale models and datasets.

Specifications

FP64 | 9.7 TFLOPS |

FP64 Tensor Core | 19.5 TFLOPS |

FP32 | 19.5 TFLOPS |

Tensor Float 32 (TF32) | 156 TFLOPS | 312 TFLOPS* |

BFLOAT16 Tensor Core | 312 TFLOPS | 624 TFLOPS* |

FP16 Tensor Core | 312 TFLOPS | 624 TFLOPS* |

INT8 Tensor Core | 624 TOPS | 1248 TOPS* |

GPU Memory | 40/80GB HBM2e |

GPU Memory Bandwidth | 1,935 GB/s |

Max Thermal Design Power (TDP) | 300W |

Multi-Instance GPU | Up to 7 MIGs @ 10GB |

Form Factor | PCIe |

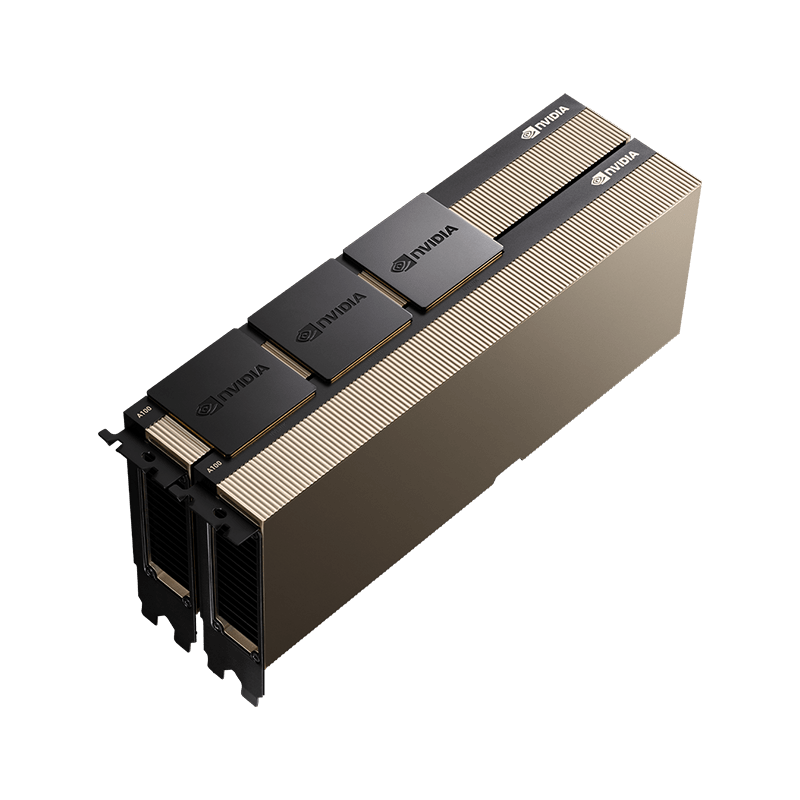

Interconnect | NVIDIA® NVLink® Bridge |

Server Options | Partner and NVIDIA-Certified Systems™ with 1-8 GPUs |
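To put the peak figures in context, the sketch below applies a simple roofline estimate to the numbers in the specification table. It is a rough model, not a benchmark: the function names `ridge_point` and `attainable_tflops` are hypothetical, and real kernels rarely reach either the peak compute or the peak bandwidth figure.

```python
# Peak dense figures copied from the specification table (no sparsity).
PEAK_TFLOPS = {
    "FP64": 9.7,
    "FP64 Tensor Core": 19.5,
    "FP32": 19.5,
    "TF32 Tensor Core": 156.0,
    "FP16 Tensor Core": 312.0,
}
MEMORY_BANDWIDTH_GBPS = 1935.0  # GB/s, from the specification table


def ridge_point(peak_tflops, bandwidth_gbps=MEMORY_BANDWIDTH_GBPS):
    """FLOPs per byte above which a kernel is limited by compute, not memory."""
    return (peak_tflops * 1e12) / (bandwidth_gbps * 1e9)


def attainable_tflops(peak_tflops, flops_per_byte, bandwidth_gbps=MEMORY_BANDWIDTH_GBPS):
    """Simple roofline: the lower of peak compute and bandwidth * intensity."""
    memory_bound_tflops = bandwidth_gbps * 1e9 * flops_per_byte / 1e12
    return min(peak_tflops, memory_bound_tflops)


if __name__ == "__main__":
    for name, peak in PEAK_TFLOPS.items():
        print(f"{name:18s} ridge point ~ {ridge_point(peak):6.1f} FLOPs/byte")
```

Under this model, a kernel that performs only a few operations per byte read is bounded by the 1,935 GB/s memory bandwidth regardless of which precision it uses, which is why the memory figures matter as much as the TFLOPS figures.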

The Marvel of Ampere Architecture

At the heart of the A100 PCIe is the Ampere architecture, which combines third-generation Tensor Cores with Multi-Instance GPU (MIG) technology. Together they accelerate AI training and inference and allow a single card to serve several GPU-accelerated workloads at once.

Elevated Performance with Third-Generation Tensor Cores

NVIDIA's A100 Tensor Cores with Tensor Float 32 (TF32) deliver up to 20X higher performance than Volta, with a further 2X boost from automatic mixed precision and FP16. Training scales to thousands of A100 GPUs when combined with NVLink®, NVSwitch™, PCIe Gen4, InfiniBand®, and the Magnum IO™ SDK; 2,048 A100 GPUs set a BERT training record of under a minute. For tasks such as DLRM, the A100 80GB delivers up to 3X the throughput of the A100 40GB. These results underpin NVIDIA's leadership in MLPerf training benchmarks.

For inference, the A100 offers up to 2X more performance with structural sparsity. On BERT, it achieves up to 249X the throughput of CPUs, and models such as RNN-T gain up to 1.25X from the A100 80GB's larger memory. NVIDIA credits the A100 with up to 20X more performance in MLPerf Inference.
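The Tensor Float 32 (TF32) format listed in the specifications keeps FP32's 8-bit exponent but only 10 bits of mantissa. The sketch below illustrates what that precision means by zeroing the low 13 mantissa bits of an FP32 value. This is an illustration under stated assumptions: the helper name `to_tf32` is hypothetical, and simple truncation is used here for clarity, whereas the hardware's rounding behavior may differ.

```python
import struct


def to_tf32(x):
    """Approximate TF32 by zeroing the low 13 mantissa bits of an FP32 value."""
    # Reinterpret the value as its 32-bit FP32 bit pattern.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Keep sign (1 bit) + exponent (8 bits) + top 10 of the 23 mantissa bits.
    bits &= 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    for value in (1.0, 1.0 + 2**-10, 1.0 + 2**-11, 3.14159265):
        print(f"{value!r:>22} -> {to_tf32(value)!r}")
```

Because the exponent width is unchanged, the representable range matches FP32; only the number of significant digits drops, to roughly three decimal digits.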

Tensor Cores: The AI Powerhouse

Tensor Cores are the engines behind the A100 PCIe's AI performance. They perform matrix multiplication, the core operation in deep learning, at very high throughput, which speeds up both training and inference and shortens the path to new AI results.

Enterprises Embrace the A100 PCIe Advantage

A100 with MIG maximizes the utilization of GPU-accelerated infrastructure. With MIG, a single A100 GPU can be partitioned into as many as seven independent instances, giving multiple users access to GPU acceleration. The A100 40GB allocates 5GB per MIG instance, while the A100 80GB's larger memory doubles that to 10GB. MIG works with Kubernetes, containers, and hypervisor-based server virtualization. This lets infrastructure managers offer a right-sized GPU with guaranteed quality of service (QoS) for each task, extending accelerated computing resources to every user.
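The per-instance memory figures can be checked with a little arithmetic. The sketch below assumes, based on NVIDIA's MIG documentation as we understand it, that the A100's memory is divided into 8 memory slices while compute is divided into 7 slices, so the smallest instance receives one eighth of the memory. The function names are hypothetical and the results are nominal sizes; usable memory per instance is somewhat lower in practice.

```python
def mig_instance_memory_gb(total_memory_gb, memory_slices=8):
    """Nominal memory of the smallest MIG instance (one memory slice)."""
    # Assumption: A100 memory is split into 8 equal slices.
    return total_memory_gb / memory_slices


def describe_partition(total_memory_gb, instances=7):
    """Summarize a GPU split into `instances` smallest-size MIG instances."""
    per_instance = mig_instance_memory_gb(total_memory_gb)
    return {
        "instances": instances,
        "memory_per_instance_gb": per_instance,
        "memory_in_use_gb": per_instance * instances,
    }


if __name__ == "__main__":
    for total in (40, 80):
        print(f"A100 {total}GB:", describe_partition(total))
```

This explains why seven instances at 5GB (or 10GB) add up to 35GB (or 70GB) rather than the full card capacity.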

High Performance Data Analytics

Data scientists face the challenge of turning massive datasets into insights. A100-accelerated servers offer not just compute power, but also large memory, high bandwidth, and scalability through NVLink® and NVSwitch™. Combined with InfiniBand, NVIDIA Magnum IO™, and the RAPIDS™ suite, including the RAPIDS Accelerator for Apache Spark, the NVIDIA data center platform accelerates these workloads. The A100 80GB delivers up to a 2X increase over the A100 40GB in big data analytics benchmarks, making it well suited to emerging workloads with growing datasets.

Up to 80GB of HBM2e GPU Memory

The A100 PCIe delivers up to 20 times the computational performance of its predecessor. Memory is the other milestone: the A100 PCIe 80GB offers 1,935 GB/s of memory bandwidth, close to 2 terabytes per second. That speed, together with the larger capacity, lets the GPU handle complex AI models and massive datasets.
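As a back-of-the-envelope illustration of what the memory figures mean, the sketch below estimates how many model parameters fit in GPU memory at a given precision and how long one full pass over that memory takes at the 1,935 GB/s listed in the specifications. The function names are hypothetical, 1 GB is taken as 10^9 bytes, and the parameter estimate counts weights only, ignoring activations, optimizer state, and framework overhead.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "INT8": 1}


def max_parameters_billion(memory_gb, precision):
    """Upper bound on parameters (in billions) that fit, weights only."""
    return memory_gb / BYTES_PER_PARAM[precision]


def full_sweep_ms(memory_gb, bandwidth_gbps=1935.0):
    """Time in milliseconds to read `memory_gb` once at peak bandwidth."""
    return memory_gb / bandwidth_gbps * 1000.0


if __name__ == "__main__":
    for capacity in (40, 80):
        fp16 = max_parameters_billion(capacity, "FP16")
        sweep = full_sweep_ms(capacity)
        print(f"{capacity}GB: up to {fp16:.0f}B FP16 parameters, "
              f"one full read in about {sweep:.1f} ms")
```

These are ceilings rather than expectations; real workloads reserve a large share of memory for data other than weights.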

The NVIDIA Tesla A100 PCIe marks a major step forward for AI and high-performance computing. By combining the Ampere architecture, Tensor Cores, and Multi-Instance GPU (MIG) technology, it accelerates AI research and turns complex simulations into usable insights. The GPU performs strongly in deep learning training, inference, and high-performance computing, and its memory capacity supports models and datasets that earlier GPUs could not hold. By letting more users share GPU acceleration, it makes that power more widely available and supports work on autonomous systems and scientific discovery.

FAQs

Popular Questions

- How does the A100 PCIe's architecture impact AI performance?

  The Ampere architecture, coupled with Tensor Cores and MIG technology, gives the A100 PCIe its AI training and inference performance.

- Can the A100 PCIe handle diverse AI workloads simultaneously?

  Yes. MIG technology allows the A100 PCIe to run several AI workloads at the same time, each on its own GPU instance.

- What industries can benefit from the A100 PCIe?

  The A100 PCIe's versatility extends to numerous industries, including healthcare, finance, and autonomous systems.

- How does the A100 PCIe contribute to sustainable computing?

  The A100 PCIe's improved performance per watt promotes energy-efficient computing, contributing to sustainability efforts.

- What is the difference between NVIDIA Tesla and other GPUs?

  NVIDIA Tesla GPUs are specialized for data center workloads, particularly HPC and AI applications. They offer higher computing power and dedicated AI features like Tensor Cores, making them well suited to scientific research and deep learning.

- Can the NVIDIA Tesla A100 PCIe be used for gaming?

  The NVIDIA Tesla A100 PCIe is not intended for gaming. It is designed for data centers and enterprise-level workloads, focusing on HPC and AI tasks.

- Is the NVIDIA Tesla A100 PCIe compatible with all server configurations?

  The Tesla A100 PCIe is compatible with servers that have PCIe slots and meet the power and cooling requirements for the GPU. However, it is essential to verify compatibility with specific server models.

- How does the NVIDIA Tesla A100 PCIe enhance AI performance?

  The NVIDIA Tesla A100 PCIe's dedicated Tensor Cores and advanced architecture enable faster AI training and inference, significantly accelerating AI workloads.

- What is the expected lifespan of the NVIDIA Tesla A100 PCIe?

  The lifespan of the A100 PCIe depends on its usage, maintenance, and future developments. Typically, data centers expect to use Tesla GPUs for several years before considering an upgrade.

- What is the estimated delivery time for the Tesla A100 PCIe once ordered?

  Once you confirm your order with us, you can anticipate delivery within a week, subject to the product's availability. If you have any specific inquiries or require further information about the product, please get in touch with us or our dedicated sales team.