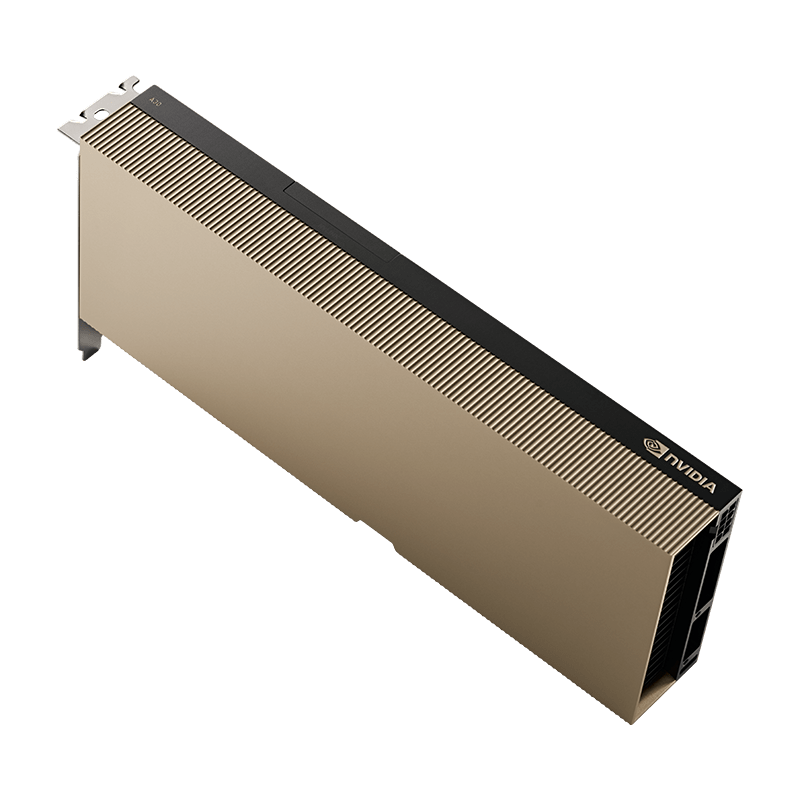

Powering the future of AI and HPC

NVIDIA Tesla A30 Tensor Core GPU

24GB

Introducing the NVIDIA A30 Tensor Core GPU, a versatile accelerator for diverse enterprise workloads. Built on the NVIDIA Ampere architecture, it combines Tensor Cores with Multi-Instance GPU (MIG) technology to deliver secure speedups for AI inference at scale and for high-performance computing (HPC). With a PCIe form factor, 933GB/s of memory bandwidth, and a 165W power envelope, the A30 is tailored for mainstream servers, giving data centers flexible capacity while delivering strong value for enterprises. It is NVIDIA's answer to the growing demands of data centers, cloud computing, and AI.

Specifications

| Specification | Value |
|---|---|
| FP64 | 5.2 teraFLOPS |
| FP64 Tensor Core | 10.3 teraFLOPS |
| FP32 | 10.3 teraFLOPS |
| TF32 Tensor Core | 82 teraFLOPS / 165 teraFLOPS* |
| BFLOAT16 Tensor Core | 165 teraFLOPS / 330 teraFLOPS* |
| FP16 Tensor Core | 165 teraFLOPS / 330 teraFLOPS* |
| INT8 Tensor Core | 330 TOPS / 661 TOPS* |
| INT4 Tensor Core | 661 TOPS / 1,321 TOPS* |
| Media engines | 1 optical flow accelerator (OFA), 1 JPEG decoder (NVJPEG), 4 video decoders (NVDEC) |
| GPU memory | 24GB HBM2 |
| GPU memory bandwidth | 933GB/s |
| Interconnect | PCIe Gen4: 64GB/s; third-generation NVLink: 200GB/s** |
| Form factor | Dual-slot, full-height, full-length (FHFL) |
| Max thermal design power (TDP) | 165W |
| Multi-Instance GPU (MIG) | 4 GPU instances @ 6GB each; 2 GPU instances @ 12GB each; 1 GPU instance @ 24GB |
| Virtual GPU (vGPU) software support | NVIDIA AI Enterprise; NVIDIA Virtual Compute Server |

\* With sparsity

\*\* NVLink Bridge for up to two GPUs
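To put the interconnect figures in context, the short Python sketch that follows estimates best-case copy times. It assumes the 64GB/s (PCIe Gen4) and 200GB/s (NVLink) figures are bidirectional totals, so roughly half is available in each direction, and it ignores protocol overhead. The function name `one_way_copy_seconds` is purely illustrative.

```python
# Rough one-way copy-time estimate from the A30 interconnect figures.
# ASSUMPTION: the quoted figures are bidirectional totals, so only about
# half of each is available for a one-way copy. Protocol overhead is
# ignored, so real transfers will take somewhat longer than this.

SPEC_BIDIRECTIONAL_GB_PER_S = {
    "PCIe Gen4": 64.0,
    "NVLink (3rd gen)": 200.0,
}


def one_way_copy_seconds(size_gb: float, link: str) -> float:
    """Best-case seconds to move size_gb in one direction over the named link."""
    per_direction = SPEC_BIDIRECTIONAL_GB_PER_S[link] / 2.0
    return size_gb / per_direction


# Example: filling the full 24GB of GPU memory once.
for link_name in SPEC_BIDIRECTIONAL_GB_PER_S:
    print(f"{link_name}: {one_way_copy_seconds(24.0, link_name):.2f} s")
```

Under these assumptions, filling all 24GB of GPU memory takes about 0.75 s over PCIe Gen4 and about 0.24 s between two NVLink-bridged A30 cards.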

Architecture and Performance

The NVIDIA Tesla A30 is built on the Ampere architecture, a significant step forward in GPU design. With 3,584 CUDA cores and 24GB of HBM2 memory, the A30 handles large datasets and complex calculations efficiently. Its massively parallel design makes it well suited to compute-intensive workloads, helping research teams and industries move from experiment to result faster.
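As a sanity check on the FP32 figure in the specifications table, theoretical peak throughput is cores × clock × 2 (one fused multiply-add per core per cycle). This sketch assumes the A30's 3,584 CUDA cores and a boost clock of roughly 1,440 MHz, a value taken from public spec listings rather than from this page; the helper name is hypothetical.

```python
# Back-of-the-envelope peak FP32 throughput for the A30.
# ASSUMPTIONS: 3,584 CUDA cores, a boost clock of about 1,440 MHz (from
# public spec listings, not from this page), and 2 floating-point
# operations per core per clock (one fused multiply-add).

CUDA_CORES = 3584
BOOST_CLOCK_HZ = 1.44e9
FLOPS_PER_CORE_PER_CLOCK = 2  # one FMA counts as a multiply plus an add


def peak_fp32_tflops(cores: int, clock_hz: float, flops_per_clock: int) -> float:
    """Theoretical peak in teraFLOPS; sustained throughput is always lower."""
    return cores * clock_hz * flops_per_clock / 1e12


print(f"{peak_fp32_tflops(CUDA_CORES, BOOST_CLOCK_HZ, FLOPS_PER_CORE_PER_CLOCK):.1f} TFLOPS")
```

The result, about 10.3 teraFLOPS, matches the table. Real applications reach only a fraction of this theoretical peak.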

Scalability and Flexibility

The NVIDIA Tesla A30 lets businesses scale infrastructure to match their needs: a single card can be partitioned with MIG for many small jobs, two cards can be paired over an NVLink Bridge for larger ones, and more PCIe cards can be added as demand grows. This flexibility helps organizations match GPU capacity to changing workloads and run them efficiently. Because its PCIe form factor suits mainstream servers, the A30 also fits into existing infrastructure, which simplifies deployment. Support for popular AI frameworks and software makes it straightforward for developers to adopt, helping organizations get more from existing infrastructure investments across AI and HPC applications in many industries.

Deep Learning Training and Inference

The NVIDIA A30 accelerates AI training with TF32 and FP16 precision, delivering up to 20X higher training throughput than the NVIDIA T4. NVLink and PCIe Gen4 let it scale across multiple GPUs. For inference, the A30 has posted strong MLPerf results, handles dynamic workloads, and delivers up to 2X additional performance through structured sparsity support. Paired with NVIDIA Triton™ Inference Server, it simplifies deploying AI at scale. The A30 accelerates workloads such as natural language processing, computer vision, and recommendation systems.
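The up-to-2X sparsity speedup relies on Ampere's 2:4 structured sparsity pattern: in every group of four consecutive weights, at most two are non-zero, which lets Sparse Tensor Cores skip the zeros. The Python sketch that follows shows only the pruning pattern, using simple magnitude-based selection as an assumed criterion. Production workflows typically also fine-tune the pruned model to recover accuracy, and `prune_2_of_4` is a hypothetical name.

```python
# Minimal illustration of 2:4 structured sparsity: in every group of four
# consecutive weights, keep the two with the largest magnitude and zero
# the other two. Magnitude pruning is an assumed selection rule here.

from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


print(prune_2_of_4([0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]))
```

Half of the weights become zero in a fixed, hardware-friendly layout, which is what allows the Tensor Cores to roughly double math throughput on the remaining values.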

Power Efficiency and Enterprise-Ready Utilization

The A30's Ampere architecture handles intensive workloads within a 165W power envelope, helping data centers reduce energy use and carbon footprint without sacrificing performance. With MIG, the A30 improves utilization of GPU-accelerated infrastructure by partitioning one GPU into as many as four fully isolated instances, so multiple users can share GPU acceleration. MIG works with Kubernetes, containers, and hypervisor-based server virtualization, so infrastructure managers can offer right-sized GPU instances with guaranteed quality of service (QoS) for every job, extending accelerated computing to every user.
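MIG partitions are created with `nvidia-smi` after MIG mode has been enabled (for example, `nvidia-smi -i 0 -mig 1`, run as root). The Python sketch that follows assumes a simplified model in which the A30's four slices are consumed by the 1g.6gb, 2g.12gb, and 4g.24gb profiles listed in the specifications table; it checks that a requested layout fits and builds the matching `nvidia-smi mig -cgi <profiles> -C` command. The helper is hypothetical, and real placement rules are stricter, so `nvidia-smi` remains the final check.

```python
# Sketch: check that a requested set of A30 MIG profiles fits on one GPU
# and build the matching nvidia-smi command. Simplified model: the A30
# has four slices and each profile consumes 1, 2 or 4 of them. Real
# placement rules are stricter, so nvidia-smi has the final say.

from typing import List

A30_TOTAL_SLICES = 4
A30_PROFILES = {"1g.6gb": 1, "2g.12gb": 2, "4g.24gb": 4}  # profile -> slices


def mig_create_command(profiles: List[str]) -> List[str]:
    unknown = [p for p in profiles if p not in A30_PROFILES]
    if unknown:
        raise ValueError(f"not an A30 MIG profile: {unknown}")
    used = sum(A30_PROFILES[p] for p in profiles)
    if used > A30_TOTAL_SLICES:
        raise ValueError(f"needs {used} slices, A30 has {A30_TOTAL_SLICES}")
    # -cgi creates the GPU instances; -C also creates a default compute
    # instance inside each one. Run as root once MIG mode is enabled.
    return ["nvidia-smi", "mig", "-cgi", ",".join(profiles), "-C"]


print(" ".join(mig_create_command(["2g.12gb", "1g.6gb", "1g.6gb"])))
```

This example requests one 12GB instance and two 6GB instances, filling all four slices; asking for three 2g.12gb instances would be rejected before any command is built.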

Deployment and Support

The NVIDIA A30 is available in NVIDIA-Certified Systems™ from trusted OEM partners, which combine compute acceleration with secure networking in enterprise servers. This lets customers easily deploy systems that run both traditional and modern AI applications from the NVIDIA NGC catalog, giving businesses a high-performance, scalable platform for AI-driven innovation. NVIDIA provides comprehensive support for the A30, and its team helps optimize A30 usage and resolves issues promptly. For further assistance, contact Mediasys support. Your satisfaction is our priority, and we are committed to delivering exceptional support for your needs.

VRAM and Memory Bandwidth

With 24GB of HBM2 memory and 933GB/s of memory bandwidth, the Tesla A30 moves data efficiently, reducing memory bottlenecks and raising overall performance. The 24GB capacity lets large models and datasets stay in GPU memory without sacrificing speed, making the A30 a strong fit for data-intensive applications and high-performance computing.
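Whether a workload is limited by the A30's 933GB/s of bandwidth or by its Tensor Core throughput can be estimated with the standard roofline model: attainable throughput is the lower of peak compute and bandwidth × arithmetic intensity (FLOPs performed per byte moved). This Python sketch uses the dense peak figures from the specifications table; the function names are illustrative, and the model ignores caches and other real-world effects.

```python
# Simple roofline estimate: attainable throughput is capped either by peak
# compute or by memory bandwidth times arithmetic intensity (FLOPs per byte
# moved). Peaks are the dense A30 figures from the specifications table.

A30_MEM_BANDWIDTH_GB_S = 933.0
A30_PEAK_TFLOPS = {"FP64 Tensor Core": 10.3, "FP16 Tensor Core": 165.0}


def attainable_tflops(intensity_flops_per_byte: float, peak_tflops: float,
                      bandwidth_gb_s: float = A30_MEM_BANDWIDTH_GB_S) -> float:
    # GB/s * FLOP/byte = GFLOP/s; divide by 1,000 for TFLOP/s.
    memory_bound = bandwidth_gb_s * intensity_flops_per_byte / 1000.0
    return min(peak_tflops, memory_bound)


def ridge_point(peak_tflops: float,
                bandwidth_gb_s: float = A30_MEM_BANDWIDTH_GB_S) -> float:
    """Intensity (FLOP/byte) above which a kernel becomes compute-bound."""
    return peak_tflops * 1000.0 / bandwidth_gb_s


for name, peak in A30_PEAK_TFLOPS.items():
    print(f"{name}: ridge at {ridge_point(peak):.0f} FLOP/byte, "
          f"{attainable_tflops(10.0, peak):.2f} TFLOPS at 10 FLOP/byte")
```

With these inputs, a kernel doing 10 FLOPs per byte is memory-bound at about 9.3 teraFLOPS for both precisions, while FP16 Tensor Core work needs roughly 177 FLOPs per byte before compute becomes the limit.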

Enhanced Virtualization Experience

The Tesla A30 plays a key role in virtualized infrastructure. With NVIDIA AI Enterprise and NVIDIA Virtual Compute Server, virtual machines can share A30 acceleration, or be assigned dedicated MIG instances, for AI, data science, and HPC workloads. The A30 is a compute accelerator, so it is not positioned for graphics-accelerated virtual desktops, which are better served by NVIDIA's graphics-oriented data center GPUs.

Combining Ampere architecture Tensor Cores with Multi-Instance GPU (MIG), the NVIDIA Tesla A30 is a versatile accelerator for enterprise workloads, speeding up AI inference and high-performance computing. Its PCIe form factor, fast memory bandwidth, and low power consumption make it a good fit for mainstream servers meeting data center, cloud, and AI demands. Its Tensor Cores shorten training and inference for natural language processing, computer vision, and recommendation systems, while MIG lets multiple users share one GPU and raises infrastructure utilization. Backed by NVIDIA's support services, and by Mediasys as an authorized reseller, the A30 is a reliable and adaptable choice for varied computing needs.

FAQs

Popular Questions

Can the Nvidia Tesla A30 be used for gaming?

No. The Tesla A30 is designed for AI and HPC workloads and is not optimized for gaming; it is a passively cooled data center card with no display outputs. Gamers should consider NVIDIA's gaming-oriented GPUs instead.

Does the Tesla A30 require additional cooling solutions?

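Yes. The A30 is passively cooled and relies on the server's own airflow, so it should be installed in a server designed and qualified to cool it, such as an NVIDIA-Certified System. Adequate airflow keeps the card within its 165W thermal design and protects both performance and longevity.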

Is the Tesla A30 compatible with all AI frameworks?

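The Tesla A30 supports popular AI frameworks such as TensorFlow and PyTorch, along with others built on NVIDIA CUDA. Check each framework's release notes for the CUDA and driver versions it requires.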

Can the Tesla A30 be used in a multi-GPU configuration?

Yes. Multiple A30 GPUs can be installed in one server to increase compute performance, and pairs of A30 GPUs can be connected with an NVLink Bridge for faster GPU-to-GPU communication.

What is the warranty period for the Nvidia Tesla A30?

The warranty period for the Nvidia Tesla A30 may vary depending on the region and seller, so it is recommended to check with the manufacturer or authorized dealers for specific details.

What support does Mediasys provide with NVIDIA cards?

For minor hardware or software issues, such as drivers, performance, or compatibility, Mediasys provides first-line support. Our dedicated support team is available to assist you further, and we can help with warranty replacements if needed. Your satisfaction is our priority, and we are committed to a seamless, hassle-free support experience. Don't hesitate to reach out for any assistance you may require.

Where can I get access to the NVIDIA A30 Tesla GPU for my business needs in the UAE?

For information on accessing the A30 Tesla GPU, visit NVIDIA's official website or contact an authorized reseller for a personalized solution. Mediasys is an authorized reseller and provides tailor-made solutions for customers across India and the Middle East. You can also contact us at info@mediasysdubai.com

What is the estimated delivery time for the Tesla A30?

Once you confirm your order with us, you can expect delivery within a week, subject to product availability. For specific inquiries or further information about the product, please contact us or our dedicated sales team. We are here to provide you with the best possible service and assistance.