Entry-Level GPU: Harnessing NVIDIA's AI Capabilities for Universal Server Integration

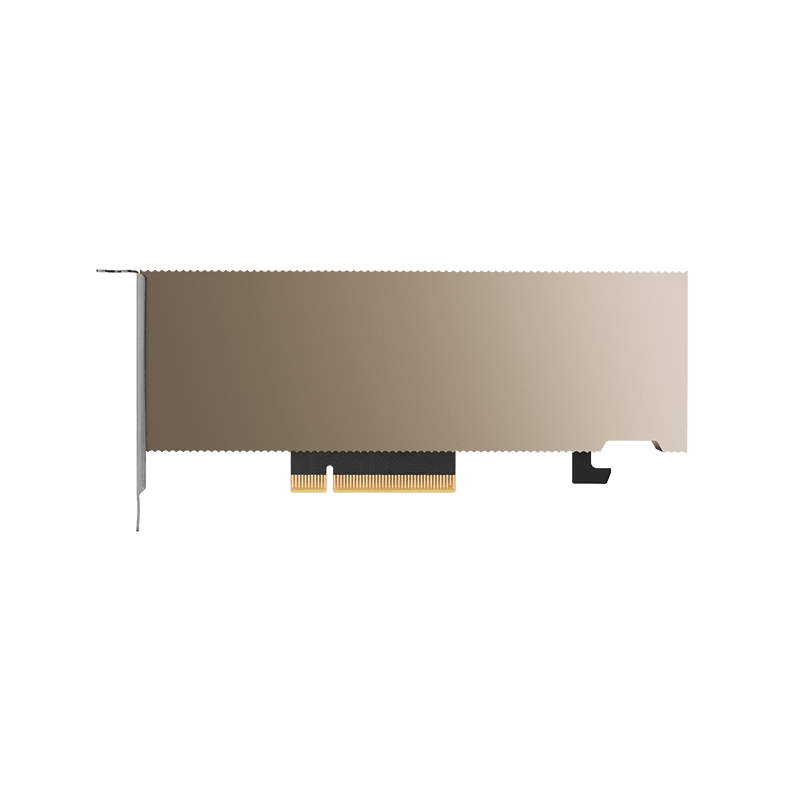

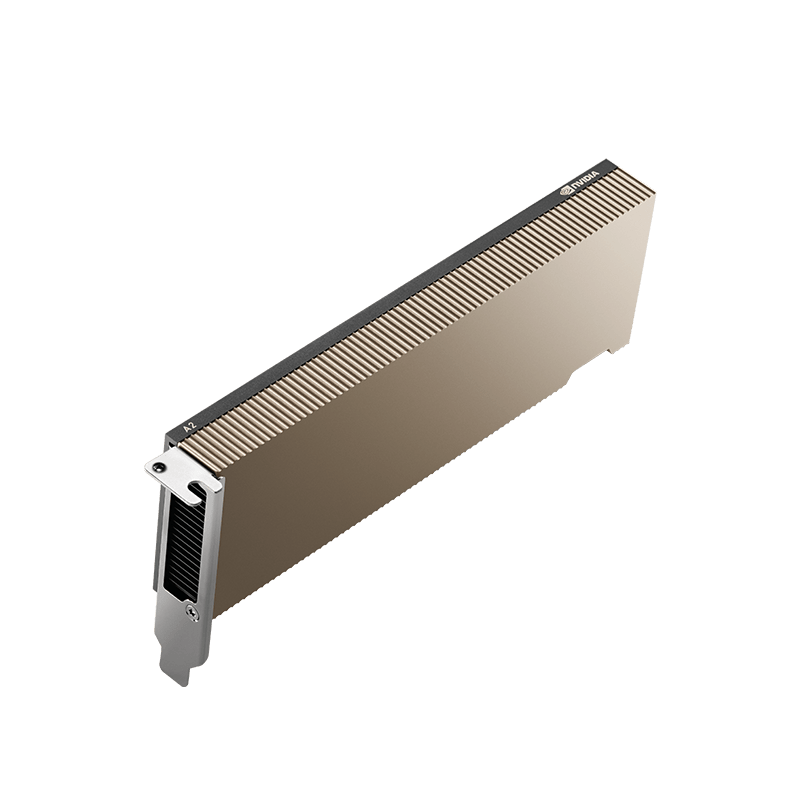

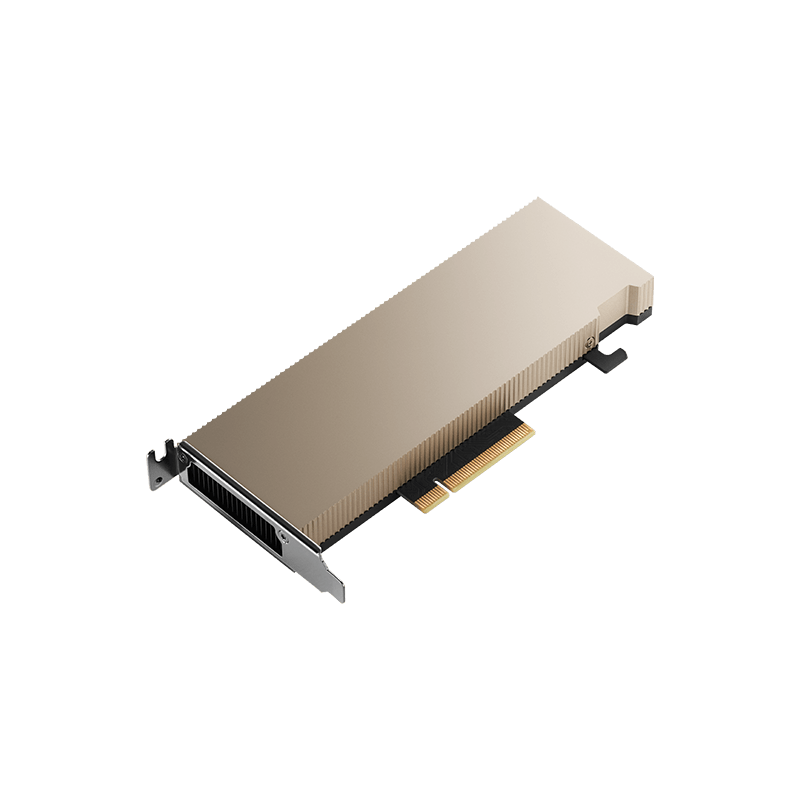

NVIDIA Tesla A2 - Tensor Core GPU

16GB

The NVIDIA A2 Tensor Core GPU is an entry-level data center GPU designed to bring NVIDIA AI to every server. Built on the NVIDIA Ampere architecture, it includes third-generation Tensor Cores, specialized hardware units that accelerate the matrix math at the heart of AI workloads, with a particular focus on inference. This lets businesses and organizations add AI capabilities to their existing operations without investing in high-end hardware. In this article, we look at the A2's specifications, use cases, and benefits.

Specifications

| Specification | NVIDIA A2 |
|---|---|
| GPU Architecture | NVIDIA Ampere |
| CUDA Cores | 1280 |
| Tensor Cores | 40 (3rd generation) |
| RT Cores | 10 (2nd generation) |
| Peak FP32 | 4.5 TFLOPS |
| Peak TF32 Tensor Core | 9 TFLOPS (18 TFLOPS with sparsity) |
| Peak FP16 Tensor Core | 18 TFLOPS (36 TFLOPS with sparsity) |
| Peak INT8 Tensor Core | 36 TOPS (72 TOPS with sparsity) |
| Peak INT4 Tensor Core | 72 TOPS (144 TOPS with sparsity) |
| GPU Memory | 16 GB GDDR6 with ECC |
| Memory Bandwidth | 200 GB/s |
| Thermal Solution | Passive |
| Maximum Power Consumption | 40-60 W (configurable) |
| System Interface | PCIe Gen 4.0 x8 |
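The "with sparsity" figures refer to the fine-grained structured sparsity supported by Ampere Tensor Cores: when at most two of every four consecutive weights are nonzero, the hardware can skip the zeros, which is why each sparse figure is double the dense one. The Python sketch below illustrates only the 2:4 pruning pattern (keep the two largest-magnitude values in each group of four). The function names `prune_2_of_4` and `is_2_of_4_sparse` are hypothetical; in practice, pruning is done with framework tooling and the model is usually fine-tuned afterward to recover accuracy.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Zero the two smallest-magnitude values in every group of four.

    Hypothetical helper illustrating the 2:4 structured-sparsity pattern;
    assumes len(weights) is a multiple of 4 (for example, one weight-matrix row).
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(group[i] if i in keep else 0.0 for i in range(4))
    return pruned


def is_2_of_4_sparse(weights: List[float]) -> bool:
    """Check that every group of four has at most two nonzero values."""
    return all(
        sum(1 for w in weights[s:s + 4] if w != 0.0) <= 2
        for s in range(0, len(weights), 4)
    )


row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
sparse_row = prune_2_of_4(row)
print(sparse_row)  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
print(is_2_of_4_sparse(sparse_row))  # True
```

Half of the weights are removed in a fixed, regular pattern, which is what lets the hardware store the matrix compactly and skip the zeros; unstructured pruning at the same density would not get this speedup.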

NVIDIA A2 Tensor Core GPU: Powerful AI Inference at the Edge

The NVIDIA A2 Tensor Core GPU is an entry-level inference accelerator that combines low power consumption, a compact form factor, and strong performance, making it well suited to NVIDIA AI at the edge. As a low-profile PCIe Gen4 card with a configurable thermal design power (TDP) of 40 W to 60 W, the A2 brings versatile inference acceleration to almost any server and simplifies deployment at scale.
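One common way to cap board power on NVIDIA data center GPUs is the power-limit option of the `nvidia-smi` utility (`nvidia-smi -i <index> -pl <watts>`). Whether a given server vendor sets the A2's TDP this way or through firmware is an assumption to confirm with the vendor. The sketch below only validates a requested limit against the 40 W to 60 W range from the specification table and builds the command line; the helper name `a2_power_limit_command` and the hard-coded range are hypothetical, and the command itself is not executed.

```python
from typing import List

# Range taken from the specification table above; assumed to match the
# board's enforced limits (check `nvidia-smi -q -d POWER` on real hardware).
A2_MIN_POWER_W = 40
A2_MAX_POWER_W = 60


def a2_power_limit_command(watts: int, gpu_index: int = 0) -> List[str]:
    """Build an nvidia-smi argv that sets the power limit for one GPU.

    Hypothetical helper: it only validates input and builds the command.
    Running it (e.g. subprocess.run(cmd, check=True)) needs admin rights.
    """
    if not A2_MIN_POWER_W <= watts <= A2_MAX_POWER_W:
        raise ValueError(
            f"{watts} W is outside the A2 range of "
            f"{A2_MIN_POWER_W}-{A2_MAX_POWER_W} W"
        )
    # -i selects the GPU by index; -pl sets the power limit in watts.
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


print(a2_power_limit_command(50))  # ['nvidia-smi', '-i', '0', '-pl', '50']
```

Keeping command construction separate from execution makes the range check easy to test without a GPU present.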

Superior IVA Performance for the Intelligent Edge

With NVIDIA A2 GPUs, servers deliver up to 1.3X more performance on intelligent edge use cases such as smart cities, manufacturing, and retail. For intelligent video analytics (IVA) workloads, A2 GPUs enable highly efficient deployments, with up to 1.6X better price-performance and 10% better energy efficiency than previous GPU generations.

AI Inference Breakthrough: Up to 20X Faster

AI inference has transformed consumer experiences, enabling real-time responses and extracting insights from large numbers of sensors and cameras. Edge and entry-level servers accelerated with NVIDIA A2 Tensor Core GPUs deliver up to 20X higher inference performance than CPU-only servers, instantly upgrading existing systems for modern AI. The A2's Tensor Cores accelerate the matrix operations at the core of deep learning models, which makes the card a strong fit for running trained models in machine learning and AI-driven applications.
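The INT8 figures in the table reflect a common inference pattern: weights and activations are quantized to 8-bit integers, multiplied with 32-bit integer accumulation, and scaled back to floating point. The pure-Python sketch below reproduces that arithmetic on the CPU so the numbers can be inspected; it is not how the A2 is programmed (an inference runtime such as NVIDIA TensorRT maps these operations onto the Tensor Cores), and the symmetric per-tensor scaling and the function names are assumptions chosen for clarity.

```python
from typing import List, Tuple

Matrix = List[List[float]]
IntMatrix = List[List[int]]


def quantize(m: Matrix) -> Tuple[IntMatrix, float]:
    """Symmetric per-tensor INT8 quantization: q = round(x / scale)."""
    max_abs = max(abs(x) for row in m for x in row) or 1.0
    scale = max_abs / 127.0
    # Clamp to the symmetric INT8 range [-127, 127].
    q = [[max(-127, min(127, round(x / scale))) for x in row] for row in m]
    return q, scale


def int8_matmul(a: IntMatrix, b: IntMatrix) -> IntMatrix:
    """Integer matrix product; hardware accumulates these sums in INT32
    (Python ints are unbounded, so overflow is not modeled here)."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def quantized_linear(a: Matrix, b: Matrix) -> Matrix:
    """Quantize both inputs, multiply in integers, then dequantize."""
    qa, sa = quantize(a)
    qb, sb = quantize(b)
    acc = int8_matmul(qa, qb)
    # Each integer product carries the combined scale sa * sb.
    return [[v * sa * sb for v in row] for row in acc]


a = [[0.5, -1.0], [0.25, 0.75]]
b = [[1.0, 0.0], [0.5, -0.5]]
print(quantized_linear(a, b))  # float reference: [[0.0, 0.5], [0.625, -0.375]]
```

The result matches the floating-point product to within quantization error (here well under 0.02 per element), while the expensive inner loop runs entirely on 8-bit integers, which is where the higher INT8 throughput comes from.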

Enhanced Performance

Compared with CPU-only processing, the A2 Tensor Core GPU delivers a significant performance boost for AI workloads. Its dedicated Tensor Cores shorten processing times for inference and lighter training tasks, so organizations can serve models faster, iterate on AI applications more quickly, and reach insights sooner. Higher throughput per server and lower power draw also translate into cost savings and increased productivity.

Driving AI Inference Performance: Cloud, Data Center, and Edge

AI inference drives innovation across industries such as healthcare, finance, and retail. Together with NVIDIA's A100 and A30 Tensor Core GPUs, the A2 completes a full AI inference portfolio for cloud, data center, and edge, delivering faster insights with fewer servers and lower power consumption. The A2 is optimized for inference workloads and for entry-level servers with space and thermal constraints, including 5G edge and industrial environments. Its low-profile form factor and power envelope of 40 W to 60 W make it a practical choice for a wide range of servers.

In summary, the NVIDIA Tesla A2 is an entry-level Tensor Core GPU for AI inference, machine learning, research, and analytics. Its Tensor Cores, compact low-power design, and broad server compatibility give organizations a cost-effective way to add AI acceleration to existing infrastructure, with a balance of performance, efficiency, and cost that makes it attractive across industries.

FAQs

Popular Questions


What is the price of the A2 Tensor Core GPU?

The pricing of the A2 Tensor Core GPU varies depending on the specific model and configuration. For accurate and up-to-date pricing information, it is recommended to visit the official NVIDIA website or contact authorized resellers.


Can the A2 Tensor Core GPU be used for gaming?

The A2 is designed for AI inference in data center servers rather than for gaming. It has no display outputs and relies on the server's airflow for cooling, so it is not practical in a desktop gaming PC. For gaming, NVIDIA's GeForce GPUs are the better choice.


Is the A2 Tensor Core GPU compatible with all servers?

The A2 works with a wide range of servers, but check compatibility before purchasing. The card is a low-profile PCIe card with a PCIe Gen 4.0 x8 interface, and its passive cooler requires adequate chassis airflow. NVIDIA and server vendors provide documentation and support to help confirm compatibility with your server configuration.


Does the A2 Tensor Core GPU require additional cooling?

Yes. The A2 uses a passive thermal solution with no onboard fan, so it depends on the server chassis to move enough air across its heatsink. Make sure the server provides adequate airflow for the card to maintain optimal performance and reliability.


How can I buy the NVIDIA Tesla A2 Tensor Core GPU in the UAE?

You can contact us at info@mediasysdubai.com