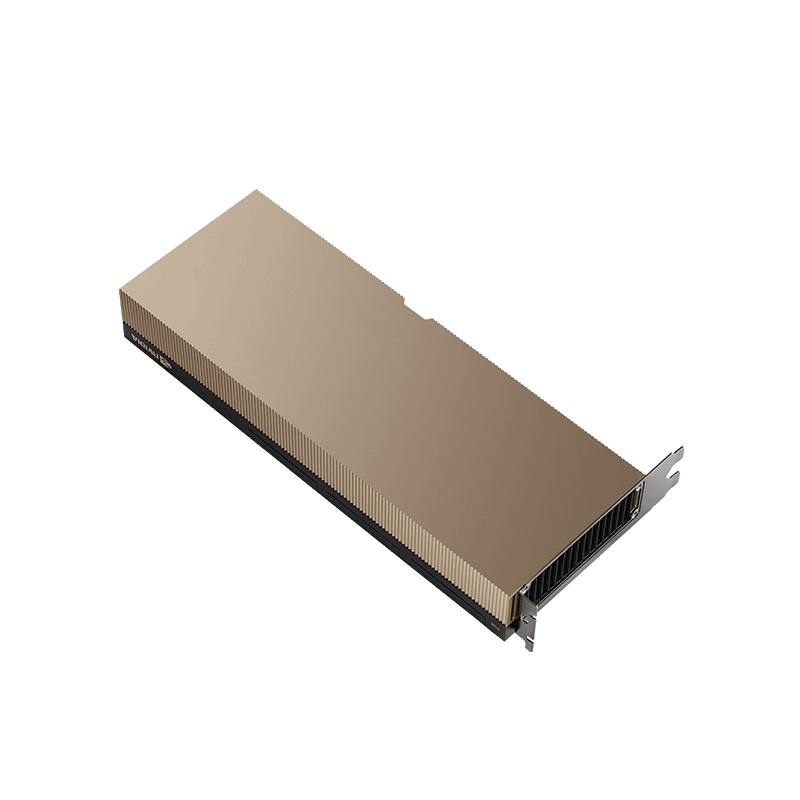

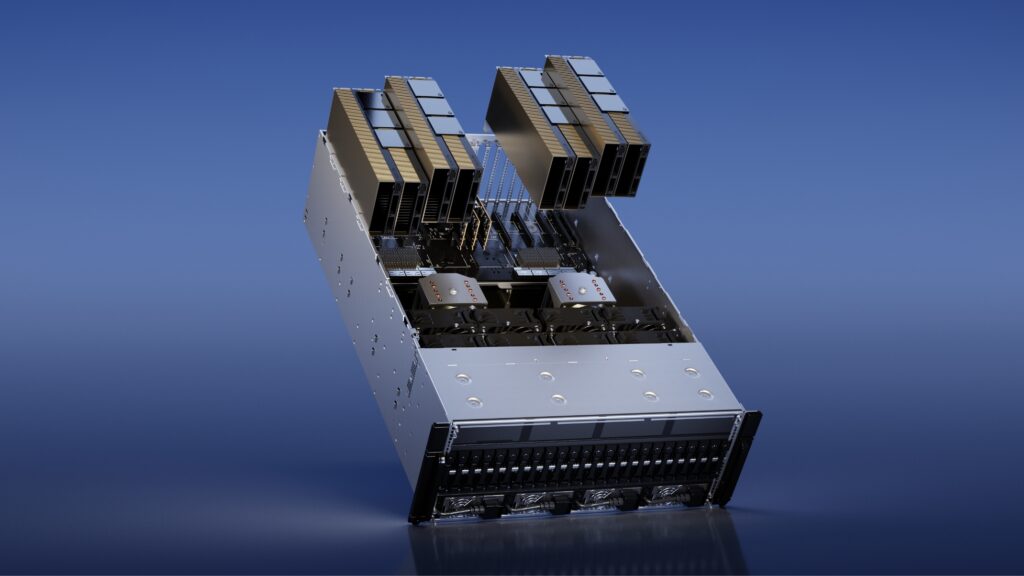

Unrivalled performance, scalability, and security tailored for every data center.

NVIDIA Tesla H100 Tensor Core GPU

80GB

In the dynamic realm of AI and high-performance computing, Nvidia consistently leads with pioneering innovations. Their latest creation, the Nvidia Tesla H100, has captured the tech world’s attention. This article offers a comprehensive examination of the Nvidia Tesla H100, detailing its features and capabilities, and forecasting its impact on the AI landscape. This GPU boasts unparalleled performance, scalability, and security through its NVIDIA® H100 Tensor Core GPU, facilitating connectivity of up to 256 GPUs via the NVIDIA NVLink® Switch System. With a dedicated Transformer Engine, it achieves a remarkable 30X acceleration in large language models (LLMs) compared to its precursor, setting a new standard for cutting-edge conversational AI.

Specifications

FP64 | 26 teraFLOPS |

FP64 Tensor Core | 51 teraFLOPS |

FP32 | 51 teraFLOPS |

TF32 Tensor Core | 756 teraFLOPS2 |

BFLOAT16 Tensor Core | 1,513 teraFLOPS2 |

FP16 Tensor Core | 1,513 teraFLOPS2 |

FP8 Tensor Core | 3,026 teraFLOPS2 |

INT8 Tensor Core | 3,026 TOPS2 |

GPU memory | 80GB |

GPU memory bandwidth | 2TB/s |

Decoders | 7 NVDEC |

Max thermal design power (TDP) | 300-350W (configurable) |

Multi-Instance GPUs | Up to 14 MIGS @ 12GB |

Form factor | PCIe |

Interconnect | NVLink: 600GB/s |

Server options | Partner and |

NVIDIA AI Enterprise | Included |

Driven by the Grace Hopper Architecture

Fueling the NVIDIA Grace Hopper CPU+GPU architecture, the Hopper Tensor Core GPU is tailor-made for terabyte-scale accelerated computing, propelling large-model AI and HPC to unprecedented heights with a staggering 10X performance boost. Anchored by the NVIDIA Grace CPU, ingeniously crafted on the adaptable Arm® architecture, this architecture is purpose-built for accelerated computing from the ground up. The Hopper GPU seamlessly integrates with the Grace CPU, facilitated by NVIDIA’s rapid chip-to-chip interconnect, ushering in an astonishing 900GB/s of bandwidth—7X quicker than PCIe Gen5. This groundbreaking union facilitates an astounding 30X surge in aggregate system memory bandwidth for the GPU, outperforming today’s fastest servers. Moreover, it empowers applications dealing with terabytes of data, boasting up to 10X greater performance. This transformative design sets a new standard for AI and HPC, ensuring unmatched performance on a grand scale.

Real-Time Deep Learning Inference Redefined

The realm of AI addresses an extensive spectrum of business challenges, employing a diverse range of neural networks. An exceptional AI inference accelerator must not only excel in delivering peak performance but also possess the adaptability to expedite these intricate networks. The H100 goes beyond to elevate NVIDIA’s preeminent leadership in inference, introducing multiple innovations that amplify inference speeds by an astounding factor of up to 30X while maintaining the lowest latency. With the incorporation of fourth-generation Tensor Cores, enhancements span all precisions, encompassing FP64, TF32, FP32, FP16, INT8, and now FP8. This comprehensive augmentation not only conserves memory usage but also bolsters performance, all the while upholding the crucial accuracy demanded by Large Language Models (LLMs) and similar applications.

Revolutionary Fourth-Gen Tensor Cores

The H100 introduces a powerhouse of innovation, boasting fourth-generation Tensor Cores and a Transformer Engine armed with FP8 precision. This dynamic combination accelerates training for GPT-3 (175B) models by an impressive 4X compared to its predecessor. The amalgamation of fourth-generation NVLink, offering an impressive 900 gigabytes per second (GB/s) of GPU-to-GPU interconnect, along with NDR Quantum-2 InfiniBand networking that enhances cross-node GPU communication, PCIe Gen5, and the cutting-edge NVIDIA Magnum IO™ software, ensures seamless scalability. From compact enterprise systems to extensive unified GPU clusters, the H100 GPUs deliver unmatched efficiency. Scaling up to data center proportions, the deployment of H100 GPUs yields exceptional performance, ushering in the next era of exascale high-performance computing (HPC) and trillion-parameter AI, accessible to researchers across the spectrum.

Surpassing Exascale in High-Performance Computing

The NVIDIA data center platform constantly defies Moore’s law, consistently delivering performance improvements. With H100’s breakthrough AI capabilities, the synergy of HPC+AI accelerates discovery for researchers tackling global challenges. The H100 triples the FLOPS of double-precision Tensor Cores, achieving 60 teraflops of FP64 computing for HPC. Its TF32 precision empowers AI-fused HPC apps to achieve one petaflop of throughput for single-precision matrix-multiply operations. H100’s DPX instructions offer 7X better performance than A100 and 40X speedups over CPUs for dynamic programming algorithms like Smith-Waterman in DNA and protein alignment.

Empowered Data Analytics Unleashed

Data analytics is a time-consuming facet of AI application development. In the realm of vast and distributed datasets, scale-out solutions relying solely on commodity CPU servers often falter, lacking the requisite scalable computing power. Here, the H100-powered accelerated servers shine, ushering in unparalleled compute prowess. With an impressive memory bandwidth of 3 terabytes per second (TB/s) per GPU and the scalability offered by NVLink and NVSwitch™, these servers effectively tackle data analytics with peak performance and seamlessly expand to accommodate massive datasets. When coupled with NVIDIA Quantum-2 InfiniBand, the Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS™, the NVIDIA data center platform emerges as a singular force in expediting these colossal workloads. It does so with an unmatched blend of performance and efficiency, carving a unique path in the realm of data acceleration.

80GB of GPU Memory for complex AI calculations

The Nvidia Tesla H100 GPU is equipped with 80GB of high-speed memory interfaces, offering substantial memory bandwidth to facilitate efficient data processing and computing tasks. The specific details regarding the memory capacity and bandwidth of the Nvidia Tesla H100 GPU can vary based on the configuration and specifications of the particular model. For precise and up-to-date information on the memory capacity and bandwidth of the Nvidia Tesla H100 GPU, it’s recommended to refer to the official Nvidia documentation or specifications provided by the manufacturer.

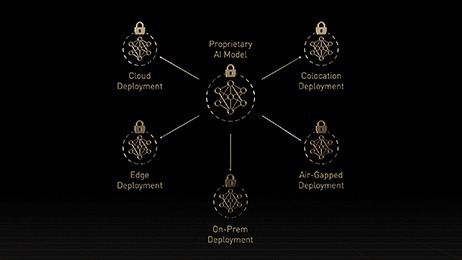

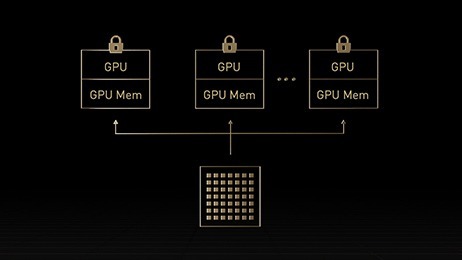

Incorporated Confidential Computing

NVIDIA Confidential Computing, integrated into the NVIDIA Hopper™ architecture, revolutionizes data security. It empowers the H100 as a groundbreaking accelerator with confidential computing capabilities, safeguarding data integrity and privacy while leveraging the H100 GPUs’ exceptional speed. Establishing a robust hardware-based trusted execution environment (TEE), it secures and isolates workloads—whether on a single H100 GPU, multiple GPUs in a node, or individual MIG instances. This seamless encapsulation eliminates the need for partitioning, ensuring GPU-accelerated applications operate smoothly within the TEE. This fusion harmonizes NVIDIA’s AI and HPC software potency with a hardware root of trust, elevating data protection to unparalleled levels.

Geared up for Enterprise - Ready Utilization

The widespread integration of AI within enterprises has become the norm, prompting organizations to seek comprehensive, AI-enabled infrastructure that propels them into this evolving epoch. NVIDIA’s H100 GPUs, tailored for mainstream servers, offer a five-year subscription bundled with enterprise support for the NVIDIA AI Enterprise software suite. This all-inclusive package streamlines AI adoption by delivering unmatched performance. This ensures that organizations have unfettered access to essential AI frameworks and tools necessary to construct H100-accelerated AI workflows, spanning AI chatbots, recommendation engines, vision AI, and more.

In the relentless pursuit of innovation within the domains of artificial intelligence and high-performance computing, the Nvidia Tesla H100 stands as a transformative force. Its monumental capabilities, ranging from unparalleled processing power to revolutionizing real-time applications and reshaping diverse industries, herald a future where the boundaries of achievement are continually redefined. By empowering researchers, accelerating AI breakthroughs, and providing a beacon for industries spanning healthcare, finance, and entertainment, the Tesla H100 doesn’t merely represent a technological leap but signifies a paradigm shift in what’s possible. This GPU emerges not just as a game-changer but as a catalyst that propels us towards an era where the improbable becomes reality, paving the way for innovation and transformation across every sector it touches.

FAQ's

Popular Questions

-

How does the Nvidia Tesla H100 differentiate from its predecessors?

The Tesla H100 distinguishes itself with heightened processing power, AI-focused architecture, and remarkable energy efficiency compared to its predecessors.

-

Can the Nvidia Tesla H100 accommodate real-time AI applications?

Absolutely, the Tesla H100's speed and low latency render it ideal for demanding real-time AI tasks.

-

Is the Tesla H100 beneficial solely for researchers, or can businesses leverage it too?

Both researchers and businesses can harness the Tesla H100's capabilities, catering to AI development, research, and industry applications.

-

Which industries are likely to experience the most significant impact from the Tesla H100?

Industries such as healthcare, finance, autonomous vehicles, and entertainment are poised for substantial transformations owing to the Tesla H100's capabilities.

-

Can Mediasys make rackmount servers/systems powered by the Tesla H100?

Certainly, at Mediasys, we have the capability to craft a bespoke server tailored precisely to your specifications. Connecting with our dedicated Sales team is all it takes to initiate this process. Upon placing your order, our skilled engineers will meticulously configure the system according to your unique requirements, ensuring a seamless and personalized experience that perfectly aligns with your needs.

-

What support does Mediasys provide with Nvidia Cards?

For any minor hardware or software-related issues, such as drivers, performance, or compatibility, Mediasys offers first-hand support. Moreover, our dedicated support team is readily available to assist you further, and we are here to help with warranty replacements if needed. Your satisfaction is our priority, and we are committed to ensuring a seamless and hassle-free experience for all your support needs. Don't hesitate to reach out to us for any assistance you may require.

-

Does Mediasys tailor make servers featuring the Tesla H100 card?

Mediasys offers custom servers with single or multiple cards to meet your needs. We provide timely delivery and dedicated support for our systems. Your satisfaction is our priority.

-

How long does Mediasys take to deliver the order?

On average, the process usually takes around one week. For systems, we subject them to rigorous burn tests, pushing them to their limits to ensure that they maintain peak performance without any throttling. This meticulous testing guarantees that the systems deliver the optimal level of performance that meets your requirements.